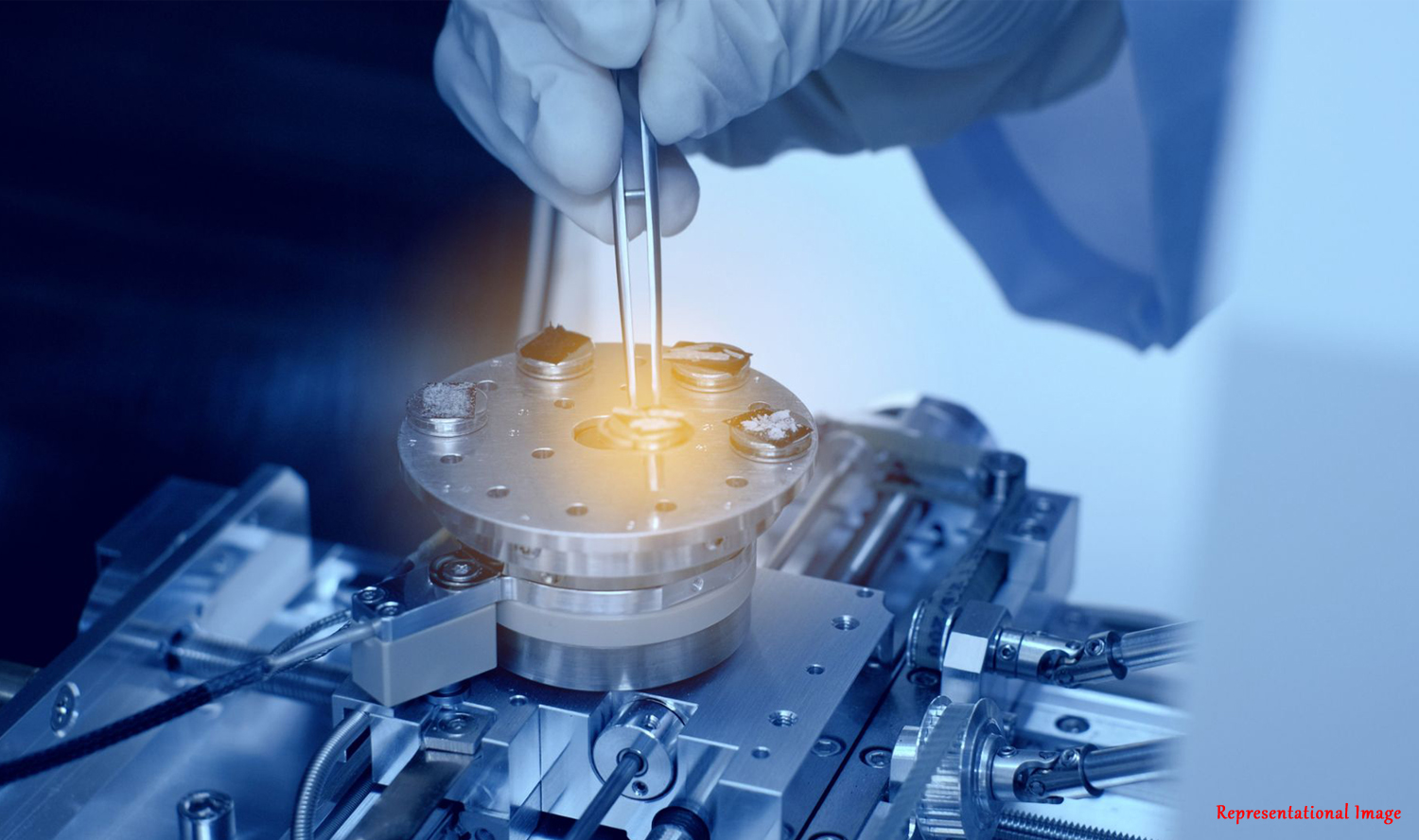

Quantum computers are generating a lot of interest nowadays. As of now, the quantum devices that have been used to demonstrate “quantum advantage” are small and noisy. There is a big push towards building larger, scalable and more accurate quantum computers.

Quantum bits (qubits), unlike classical bits, are highly prone to noise, often called decoherence, that arises from unavoidable physical interactions with their surroundings. To mitigate the effect of noise on quantum bits and quantum gates, quantum computers rely on the theory of quantum error correction and quantum fault tolerance, respectively. These provide a suite of methods for performing reliable quantum computing even with a noisy memory. The computation relies on encoding the information to be processed into a larger number of physical qubits using a quantum error correcting (QEC) code. These codes are designed and chosen so that they remove errors to a significant extent.

While quantum computers continue to advance, traditional computing technology also evolves to meet growing demands. Graphics Processing Units (GPUs) have emerged as powerful tools for a wide range of computational tasks, including machine learning, scientific simulations, and rendering graphics. As quantum computers grapple with noise and scalability issues, GPUs offer a reliable and accessible alternative for high-performance computing needs.

Quantum fault tolerance guarantees that such QEC codes can be implemented to a high degree of accuracy even with noisy quantum gates. The performance of a fault tolerance scheme depends crucially on the noise in the quantum computing device. The standard schemes assume no knowledge of the noise in the physical qubits. This raises the question of whether a fault tolerance scheme could be constructed that takes into account the dominant noise process affecting the quantum architecture.

Dr. Akshaya Jayashankar

Prof. Prabha Mandayam

Mr. My Duy Hoang Long

Prof. Hui Khoon Ng

In this work, the authors, Dr. Akshaya Jayashankar and Prof. Prabha Mandayam from the Department of Physics, Indian Institute of Technology Madras, Chennai, India, and Mr. My Duy Hoang Long and Prof. Hui Khoon Ng from Yale-NUS College, Singapore, focus their attention on an important noise model in today’s superconducting quantum processors, namely amplitude-damping noise.
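
For readers who want to see what amplitude-damping noise does to a single qubit, here is a minimal sketch in Python using the standard textbook Kraus-operator description of the channel. The damping probability `gamma = 0.1` and the helper names `amplitude_damping_kraus` and `apply_channel` are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def amplitude_damping_kraus(gamma):
    """Kraus operators of the single-qubit amplitude-damping channel.

    gamma is the probability that the excited state |1> decays to |0>.
    """
    e0 = np.array([[1.0, 0.0], [0.0, np.sqrt(1.0 - gamma)]])  # no decay
    e1 = np.array([[0.0, np.sqrt(gamma)], [0.0, 0.0]])        # decay |1> -> |0>
    return e0, e1

def apply_channel(rho, kraus_ops):
    """Apply a channel, given by its Kraus operators, to a density matrix."""
    return sum(k @ rho @ k.conj().T for k in kraus_ops)

gamma = 0.1  # illustrative value, not from the paper
kraus = amplitude_damping_kraus(gamma)

# A valid channel satisfies sum_k E_k^dagger E_k = identity.
completeness = sum(k.conj().T @ k for k in kraus)

# Start in the excited state |1><1| and apply the noise once.
rho_excited = np.array([[0.0, 0.0], [0.0, 1.0]])
rho_out = apply_channel(rho_excited, kraus)

print(np.allclose(completeness, np.eye(2)))
print(np.real(np.diag(rho_out)))  # populations of |0> and |1> after the noise
```

The noise is one-directional: the excited state leaks into the ground state with probability `gamma`, while the ground state is left untouched. This asymmetry is what distinguishes amplitude damping from the symmetric Pauli noise assumed by standard schemes.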

The authors develop a universal fault tolerance scheme that is tailored to amplitude-damping noise. A 4-qubit QEC code, which does not fall into the standard class of Pauli-noise-based QEC codes, is used. An error correction gadget, preparation gadgets, measurement gadgets, and an encoded universal gate set are constructed. It is further shown that the logical controlled-Z (CZ) gate is a better choice of two-qubit gate for the universal gate set than the usual controlled-NOT (CNOT) gate, as the former is a noise-structure-preserving gate and can be easily made fault-tolerant to amplitude-damping noise.
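
One way to get a feel for why CZ behaves better than CNOT under this noise is to push a single damping error through each gate at the level of bare physical qubits. The sketch below is an illustration under that simplifying assumption, not the paper's logical-gate construction, and the paper's formal notion of "noise-structure-preserving" may be more refined. The variable names are hypothetical.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.diag([1.0, -1.0])
lower = np.array([[0.0, 1.0], [0.0, 0.0]])  # damping error |0><1|
raising = lower.T                           # excitation |1><0|
P0 = np.diag([1.0, 0.0])                    # projector onto |0>
P1 = np.diag([0.0, 1.0])                    # projector onto |1>

# Two-qubit gates; the first qubit is the control of the CNOT.
CZ = np.diag([1.0, 1.0, 1.0, -1.0])
CNOT = np.kron(P0, I2) + np.kron(P1, X)

def conjugate(gate, error):
    """Error seen after the gate when `error` occurred just before it."""
    return gate @ error @ gate.conj().T

# Damping on the first qubit, pushed through CZ:
# it stays a damping error, with a phase flip Z on the other qubit.
cz_result = conjugate(CZ, np.kron(lower, I2))
print(np.allclose(cz_result, np.kron(lower, Z)))

# Damping on the target qubit, pushed through CNOT:
# when the control is |1>, it turns into an excitation |1><0|,
# which is not a damping-type error.
cnot_result = conjugate(CNOT, np.kron(I2, lower))
print(np.allclose(cnot_result, np.kron(P0, lower) + np.kron(P1, raising)))
```

In this toy picture, CZ keeps a damping error in the damping-plus-phase family, whereas CNOT can convert it into an excitation that an amplitude-damping-adapted code is not designed to handle.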

This paper presents the first step towards achieving fault tolerance against specific noise models. The next step would be to extend these fault-tolerant constructions to higher levels of encoding. The approach outlined here for developing noise-adapted fault tolerance schemes provides a promising route to building reliable quantum processors with fewer physical resources.

Dr. Baladitya Suri from the Department of Instrumentation and Applied Physics, Indian Institute of Science, Bengaluru, India, explains the history and importance of quantum error correction: “Quantum Error Correction (QEC) has been a dream that physicists, now called quantum engineers, have chased for two decades, a goal that makes the effort and money worth it. At the risk of oversimplifying, it involves making an “error-corrected logical qubit” out of a few “physical” qubits as per a prescribed error-correction protocol, such that the logical qubit has fewer errors than its constituent physical qubits. Several theoretical protocols were developed, each of which requires the constituent physical qubits to have errors below a threshold so that the computation using these logical qubits is fault-tolerant. If you look at superconducting qubits, the leading qubit variety in the world at the moment, the error rate in each physical qubit is a factor of ten thousand higher than what is required for fault tolerance in some promising error correction protocols. In practical terms, this translates to requiring a few thousand physical qubits to realise one logical qubit. Compare this with the state-of-the-art number of physical qubits we have managed to put together after a mountainous effort (a hundred) and the scale of the challenge becomes clear. There has been only one system, Schrödinger-cat qubits using high-quality superconducting cavities, where error correction has even come close to break-even, where the logical qubit has at least the same errors as the best physical qubit. In my opinion, the Noisy Intermediate Scale Quantum (NISQ) era that we live in at the moment is experimentalists’ Everest base camp.

“A part of the problem that makes QEC so difficult is that it is conventionally based on what are called Pauli errors, and the error thresholds, etc., are calculated using them. But the noise affecting qubits in labs the world over is not described entirely by such models. This raises the question of whether the asks of QEC are too steep for today’s qubits because the thresholds computed are unrealistic, since the underlying noise models don’t fully depict reality. This question has recently led to attempts to design QEC protocols tailored to biased-noise models, or in simpler terms, particular kinds of noise that actually affect a given system.” Dr. Baladitya Suri also acknowledges the importance of the work done by the authors of this paper: “The recent article from Prof. Prabha Mandayam’s group, titled “Achieving fault tolerance against amplitude-damping noise” by Jayashankar et al., takes up the special case of a very commonly found noise called amplitude-damping noise, and in the process develops tools that could help tackle other biased-noise models. They observe that the error thresholds for such tailored protocols are more realistic, while also noting that some native assumptions of conventional QEC need to be revised in tailored models. As an experimentalist who deals with this kind of noise in the lab every day, I believe this paper marks an important step in the direction of practically realisable QEC protocols.”

Article by Akshay Anantharaman

Here is the original link to the paper:

https://journals.aps.org/prresearch/abstract/10.1103/PhysRevResearch.4.023034