Selfies are quite a ‘thing’ nowadays. Often your selfie, set as your profile picture, is the first thing anyone sees and uses to judge your personality. So the new generation always tries to keep its selfie game up.

Since selfies have become so important in the world of photography, you must ask yourself a few questions. Are you unable to take the perfect selfie in the dark? Try a longer exposure? No, it causes motion blur. Try the flash? No, it only reaches objects at about arm’s length and brings its notorious color cast artifacts. Why not use infrared sensors? Too costly! Now you have a tool to up your selfie game. Make way for the ‘Low-Light Light Field’ (L3F) camera.

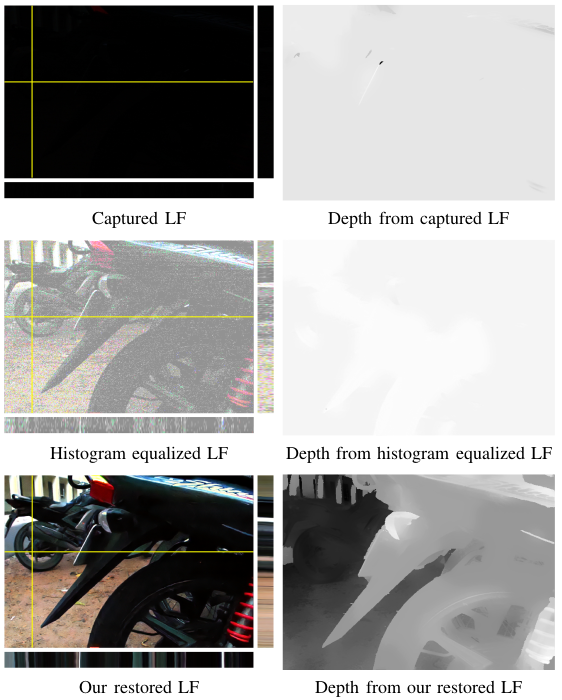

Of course, there are cameras that can take clear pictures in the dark. So what makes the L3F camera special? The L3F camera can accurately preserve the scene’s 3D structure even in the dark, whereas previous cameras can only restore a 2D projection of the scene. It achieves this by recording not only the total light intensity falling on the sensor (as previous cameras do) but also the angles of incidence of that light, which previous approaches neglect altogether. This means images are sharper and clearer.
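To picture the difference between recording total intensity and recording angles of incidence, here is a minimal sketch. It assumes a toy light field stored as a 4D array with made-up sizes and random values; the axis layout and the variable names are illustrative and do not come from the L3F work.

```python
import numpy as np

# Hypothetical toy light field: 3x3 angular views, each a 4x6 grayscale image.
# Axes (u, v) index the angle of incidence, axes (y, x) index sensor position.
rng = np.random.default_rng(0)
light_field = rng.random((3, 3, 4, 6))

# A conventional sensor adds up the light from all angles at each pixel,
# so the two angular axes collapse and only a 2D image remains.
conventional_photo = light_field.sum(axis=(0, 1))

# A light field camera keeps the angular axes, so each view is still available.
center_view = light_field[1, 1]

print(light_field.shape)
print(conventional_photo.shape)
```

Once the angular axes are summed away, there is no way to get the individual views back, which is why a single conventional photo cannot recover the 3D structure on its own.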

The L3F camera is based on a two-stage neural network. A neural network is an algorithm, embedded in a machine (in this case, a camera), that learns a task from examples instead of following hand-written rules. The two stages are:

Stage 1: Global Representation Block – The camera takes in all the light field views to encode the light field geometry.

Stage 2: View Reconstruction Block – The encoded information is used to reconstruct each light field view.
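The flow of information through the two stages can be sketched as follows. This is only a toy illustration of the data flow: the real stages are learned neural network layers, whereas the averaging and blending below are stand-ins, and every function name and the `weight` parameter are hypothetical.

```python
import numpy as np

def global_representation(views):
    """Stage 1 stand-in: summarize ALL views into one shared encoding.

    A real network would use learned layers; averaging is only a placeholder.
    """
    return views.mean(axis=0)

def reconstruct_view(view, global_code, weight=0.5):
    """Stage 2 stand-in: rebuild ONE view with help from the shared encoding.

    `weight` is a hypothetical mixing parameter, not taken from the paper.
    """
    return (1.0 - weight) * view + weight * global_code

def restore_light_field(views):
    # The shared encoding is computed once from every view...
    global_code = global_representation(views)
    # ...and then reused while each view is reconstructed separately.
    return np.stack([reconstruct_view(v, global_code) for v in views])

rng = np.random.default_rng(0)
dark_views = 0.05 * rng.random((9, 4, 6))  # 9 dim views of a 4x6 scene
restored = restore_light_field(dark_views)
print(restored.shape)
```

The point of the structure is that every reconstructed view depends on the same global encoding, which is how information from all views can inform each individual one.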

A histogram module is also used to automatically tune the amplification factor. But why go through all this trouble to obtain a clear image? Aren’t there software tools that can help reconstruct an image? Of course there are, but tools like Photoshop are not effective at touching up pictures taken in the dark. Such tools also leave a lot of noise in the image, which reduces its clarity.
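To make the idea of tuning an amplification factor from a histogram concrete, here is a simple hand-written heuristic. It is not the histogram module used in the L3F network; the function name, the target brightness of 0.4 and the bin count are all assumptions chosen for illustration.

```python
import numpy as np

def estimate_amplification(image, target_median=0.4, bins=256):
    """Pick a gain that moves the image's median brightness to `target_median`.

    `image` holds intensities in [0, 1]. Both defaults are assumed values.
    """
    hist, edges = np.histogram(image, bins=bins, range=(0.0, 1.0))
    cdf = np.cumsum(hist) / hist.sum()
    # The first bin where the cumulative histogram reaches 50% holds the median.
    median_bin = int(np.searchsorted(cdf, 0.5))
    median_level = 0.5 * (edges[median_bin] + edges[median_bin + 1])
    return target_median / median_level

rng = np.random.default_rng(0)
dark = 0.02 * rng.random((32, 32))           # very dark image, values below 0.02
gain = estimate_amplification(dark)
brightened = np.clip(dark * gain, 0.0, 1.0)  # amplify, then keep within [0, 1]
print(gain)
```

Plain amplification like this multiplies the noise by the same gain as the signal, which is one reason simple brightening alone does not give a clean result.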

A distinctive feature of the L3F algorithm, and one that makes it widely appealing, is its ability to restore both 3D scenes and conventional 2D photographs captured with a commonly used DSLR camera. For the latter, the process is as follows:

1. The DSLR camera image is converted into what is known as a pseudo-LF which, as the name suggests, is not a genuine light field. The L3F network then enhances this pseudo-LF.

2. This enhanced pseudo-LF is transformed back into a single-frame DSLR image in a lossless fashion.
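One simple way to turn a single image into light-field-like views and back without losing anything is to deal its pixels out into sub-sampled views and later interleave them again. The sketch below assumes this kind of pixel rearrangement; the function names and the parameter `s` are hypothetical, and the exact conversion used by the researchers may differ.

```python
import numpy as np

def image_to_pseudo_lf(image, s):
    """Rearrange an (H, W) image into s*s sub-sampled 'views' of shape (H/s, W/s).

    View (i, j) collects the pixels at rows i, i+s, ... and columns j, j+s, ...
    """
    h, w = image.shape
    if h % s or w % s:
        raise ValueError("image height and width must be divisible by s")
    blocks = image.reshape(h // s, s, w // s, s)
    return blocks.transpose(1, 3, 0, 2)  # axes become (i, j, y, x)

def pseudo_lf_to_image(pseudo_lf):
    """Exact inverse of image_to_pseudo_lf: interleave the views back."""
    s1, s2, hs, ws = pseudo_lf.shape
    return pseudo_lf.transpose(2, 0, 3, 1).reshape(hs * s1, ws * s2)

image = np.arange(24).reshape(4, 6)          # tiny stand-in for a DSLR image
pseudo_lf = image_to_pseudo_lf(image, s=2)   # 2x2 views, each 2x3 pixels
recovered = pseudo_lf_to_image(pseudo_lf)
print(np.array_equal(recovered, image))
```

Because the rearrangement only moves pixels around and never averages or discards them, the round trip returns the original image exactly. In the real pipeline, the enhancement step would sit between the two calls.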

Here the question arises: ‘Should a DSLR camera or a light field (LF) camera be used to take a picture in the dark?’ DSLR cameras have high resolution, but an LF camera offers depth estimation and post-capture refocusing, which are not possible with a single DSLR snapshot. This makes the L3F camera appropriate for applications such as autonomous nighttime driving and robot navigation, and it is suitable for both DSLR and LF images. While the L3F camera is specially optimized for LF images, it still maintains decent restoration quality for single-frame DSLR images. Below are images captured with the light field camera, along with their estimated depth.

Professor Rajiv Soundararajan of IISc Bangalore had this to say regarding the experiment: “The paper considers the problem of capturing low-light light fields through computational imaging techniques. The key challenge in the restoration of low-light light fields is the preservation of epipolar geometry constraints across views while simultaneously achieving enhancement for better perception of visual details. The main contribution of this work is in solving this challenge by designing a two-stage deep neural network architecture L3FNet, where the first stage encodes the visual geometry which is used in the second stage for image reconstruction. The authors show that such an approach produces visually pleasing restored light fields while simultaneously also recovering accurate depth maps due to the preservation of scene geometry.”

Professor Rajiv Soundararajan further adds: “In order to train and evaluate the performance of the algorithms, the authors design a novel and rich publicly available database of low-light light fields at different lighting conditions with corresponding ground truth images. The ground truth images allow for the training of L3FNet as well as a comparison of this method with several other methods to show the superior performance of L3FNet. Finally, the authors also establish a link between restoration of low-light light fields and restoration of individual low light images. It is shown that by shuffling any DSLR image to appear like a light field, L3FNet can be used to restore such a light field and then transform it back to a DSLR image. This approach leverages the larger receptive field available on account of the creation of the pseudo light field when compared to typical low light restoration deep networks.”

Prof. Kaushik Mitra

Mr. Mohit Lamba

Mr. Kranthi Kumar Rachavarapu

The L3F camera opens up the possibility of taking clear images in the dark. It also works with images from conventional cameras such as DSLRs. Special mention goes to the team that performed this study: Professor Kaushik Mitra, Mr. Mohit Lamba, and Mr. Kranthi Kumar Rachavarapu.

Article by Akshay Anantharaman

Here is the link to the research article:

https://ieeexplore.ieee.org/document/9305989

Here is a link to the project website. It contains demos and a copy of the paper that readers can access without being IEEE members:

https://mohitlamba94.github.io/L3Fnet/