Vision and Language are two significant abilities of Human intelligence where the former is a large portion of how humans perceive the world, and the latter is how they communicate with the world. Hence, developing computers capable of performing both Visual perception and Language understanding is one of the central focuses of AI (Artificial Intelligence) researchers worldwide. Various AI problems that combine visual (Images, Videos, etc.) and language (Text) information are collectively called Vision-Language (V-L) tasks. These include tasks like :

1. Visual Question Answering (VQA): Answering questions about images/videos.

2. Visual Captioning: Generate textual descriptions of the image/video contents etc.

One of the major challenges for researchers to solve the V-L tasks is to match the Visual (Image/ Video) and Language (Text) information and find their relationship to each other. In short, these relations are called V-L correlations. Humans perform the V-L correlations with a strong linguistic level understanding of the visual information. For instance, consider the VQA problem to answer the question: “What kind of animal is sitting next to the person?” given an image of a cat sitting next to a person. It is quite easy for humans to interpret the linguistic relationship of the “cat” in the image with the “animal” in the question since we have a strong linguistic understanding of the relationship between the two words. However, it is a difficult task for a computer.

In this paper, Gouthaman KV et al propose a method called “Linguistically-Aware Attention (LAT)” that helps computers understand the V-L correlations while solving these tasks. In the paper, the authors show the effectiveness of LAT on three V-L tasks:

1. Visual Question Answering (VQA)

2. Counting-VQA

3. Image captioning

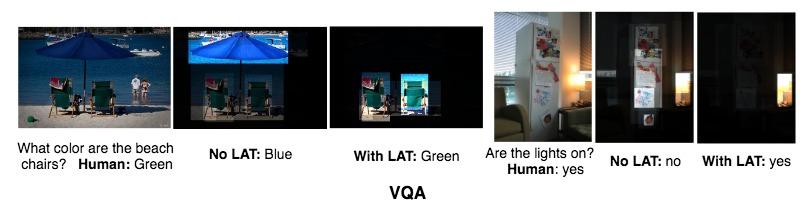

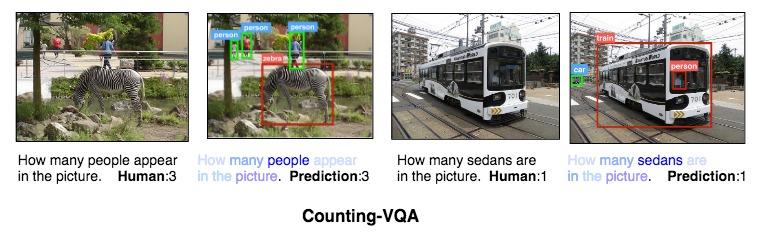

In all of these tasks, LAT is successful. In the paper, the authors have demonstrated that LAT helps computers perform in a more human-like manner. Some qualitative results from the paper are shown below:

In the above pictures, the results of the computer understanding the image both with LAT and without LAT are shown. For example, in VQA, a picture of a blue umbrella and two beach chairs are shown. The question asked is: “What is the colour of the beach chairs?” A human instantly recognizes that the question is asked for the color of the chairs, and he/she can easily predict the color as “green”. But the computer, without LAT, focuses on the umbrella instead of chairs and predicts the color “blue”, which is wrong. With LAT, the computer focuses on the chairs properly and says the colour correctly.

In captioning too with LAT the computer understands the images with more precision. For example, there is an image of a woman sitting on something with a cellphone. Without LAT, the computer generates the caption “A woman sitting on the ground with a cell phone”. But with LAT, the description is more accurate. The computer generates the caption “A woman sitting on a suitcase in the woods” which is more accurate.

Professor Alexandre Bernardino of the Instituto Superiro Tecnico, University of Lisbon had this to say on the above study: “This paper presents a new approach in the realm of language-based analysis of images. These problems mix visual (images) and text information to solve several artificial intelligence problems, for instance generate a textual answer to a textual query on a given image (Visual Question Answering – VQA) or generate a text description of the content of an image (image captioning). Classical methods encode the visual (V) and textual (Q) information in separate descriptors, that are later used to perform the analysis task. Visual object information is encoded using a visual descriptor (a.k.a. feature vector) representing appearance, shape and color. Words in the text information are encoded using a vector in a semantic space representing a kind of “similarity” between the concepts entailed in the words, in a process known as Word-to-Vector. “

Further, commenting on the work of Mr. Gouthaman KV et al “The team of researchers from the IIT Madras proposes to use an additional descriptor (L) based on a mixed visual-language representation: the names of the object classes detected in the image are converted to the linguistically rich representation provided by Word-to-Vector. This new descriptor, denoted Linguistically-Aware Attention (LAT), encodes the similarity between linguistically related objects. For instance, if the visual analysis of the image detects a “cat” and the text description refers to “animal”, classical methods struggle to bind the two concepts. However, by representing “cat” with Word-to-Vector, LAT creates an easy binding with the Word-to-Vector representation of “animal” because these are related semantic concepts. In essence, the output space of Word-to-Vector is proposed as the common ground to unify the visual and textual representations. The proposed method improves the state-of-the-art in visual question answering and image captioning in many challenging datasets.”

Regarding the impact of this study, Professor Alexandre Bernardino had this to say: “The research presented in the paper will have a major impact in the quality of application in various fields, for instance chatbots, search engines and human-computer interfaces. Together with the recent advances in speech recognition, we can actually provide robots with advanced linguistic skills to interact naturally with humans and show a high degree of intelligence as personal assistants and co-workers.”

This study could be a major breakthrough for producing machines with more human-like characteristics.

Article by Akshay Anantharaman

Here is the original link to the scientific paper:

https://www.sciencedirect.com/science/article/pii/S0031320320306154